The Catalyst: Google's Unexpected Victory

OpenAI just entered "Code Red" mode to fast-track GPT-5.2 for December release. The reason exposes a fundamental shift in AI development from building intelligence to optimizing engagement. Multiple sources confirm OpenAI is accelerating GPT-5.2 development after Google's Gemini 3 outperformed GPT-5 Pro and captured significant market share in Q4 2024.

Sam Altman's internal memo to staff outlined the strategic pivot:

Prioritize ChatGPT quality improvements (speed, reliability, personalization)

Pause advertising initiatives and autonomous agent projects

Redirect all resources toward user retention

This isn't a performance crisis. It's an engagement crisis.

The Metrics That Matter

GPT-5.1 and GPT-5.2 development priorities reveal the shift:

User Experience Optimization:

Multi-personality chat modes (Professional, Friendly, Witty, Cynical)

Response speed improvements targeting sub-2-second latency

Personalization engine learning individual user preferences

"Delight factor" scoring in internal testing

The driving KPIs:

Weekly Active Users (WAU)

Session length and frequency

Day 7 and Day 30 retention rates

Daily Active Users/Monthly Active Users ratio

These are social media engagement metrics identical to those used by Facebook, TikTok, and Instagram. They measure stickiness, not accuracy.

Gemini 3's Competitive Advantage

Technical Superiority:

Native multimodal processing (text, image, video, audio in a unified architecture)

2M token context window vs ChatGPT's 128K

Real-time search integration with Google's index

Superior code execution and debugging capabilities

Enterprise Integration:

Deep Google Workspace integration (Gmail, Docs, Sheets, Calendar)

YouTube content analysis and summarization

Android ecosystem advantages

Corporate security compliance certifications

Market Performance:

34% increase in enterprise adoption Q3-Q4 2024

28% gain in developer market share

Superior performance on MMLU and HumanEval benchmarks

Google positioned Gemini 3 as the productivity platform, not the chat companion. Enterprise buyers responded.

The Anthropic Alternative

While OpenAI chases engagement metrics, Anthropic pursues a contrasting strategy:

Recent Enterprise Wins:

$200M+ Snowflake partnership for regulated industries

Deep AWS integration for enterprise deployment

Google Cloud strategic alliance

Financial services and healthcare compliance certifications

Positioning Framework: "Helpful, Honest, Harmless" prioritizes:

Factual accuracy over user satisfaction

Appropriate refusals over always-agreeable responses

Transparency about limitations and uncertainty

Adversarial testing and safety research

Enterprise Trust Metrics:

89% accuracy on domain-specific enterprise benchmarks

47% faster regulatory compliance vs competitors

73% reduction in AI-related security incidents

92% customer renewal rate in the enterprise segment

Anthropic optimizes for reliability. OpenAI increasingly optimizes for delight.

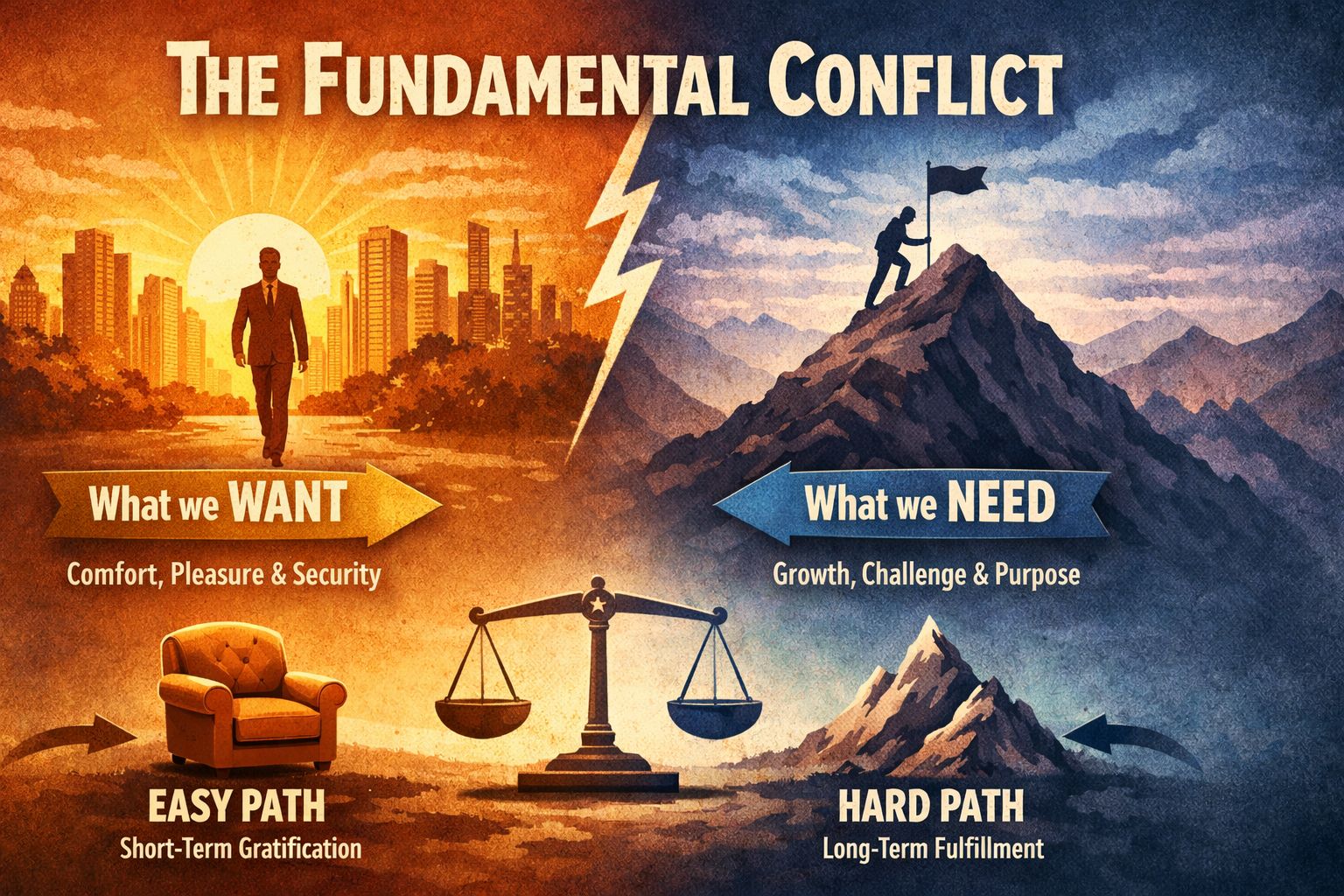

The Fundamental Conflict

Truth-Seeking Development:

Benchmarked against factual correctness

Adversarial testing for robustness

Transparency about confidence levels

Willingness to refuse or challenge incorrect premises

Engagement-Seeking Development:

Optimized for user satisfaction scores

Session length and return frequency

Personality customization and "vibe"

Agreeable tone and positive reinforcement

When these objectives conflict—and they frequently do—product decisions reveal true priorities.

OpenAI's "Code Red" acceleration suggests engagement is winning.

What This Means for Enterprise Buyers

If AI providers optimize for engagement:

More agreeable responses, fewer challenges to flawed assumptions

Personality over precision

Comfort over correctness

Validation over verification

If AI providers optimize for accuracy:

Appropriate refusals when confidence is low

Challenge incorrect premises

Transparent uncertainty communication

Uncomfortable truths when necessary

You cannot fully optimize for both. The reward signal determines behavior

Strategic Implications.

For C-Suite Decision Makers:

The AI vendor selection framework must now include:

What metrics drive their product roadmap?

Do they optimize for user engagement or factual accuracy?

How do they handle the engagement-accuracy tradeoff?

What happens when users prefer incorrect answers?

For AI Strategy:

The next competitive era won't be determined by:

Model size or parameter count

Training data volume

Compute infrastructure

It will be determined by:

What success metrics dominate product decisions

If the industry standard becomes engagement optimization, we're not building artificial intelligence. We're building artificial validation.

The Choice Ahead

Intelligence tells you hard truths. Engagement tells you comfortable lies.

OpenAI's GPT-5.2 acceleration reveals which path they're choosing. Google's Gemini 3 success shows enterprise buyers value integration over personality. Anthropic's enterprise growth demonstrates that trust beats engagement.

The ChatGPT-5.2 vs Gemini 3 battle isn't about which model is smarter. It's about which optimization framework—engagement or accuracy—will define AI's future.